At the beginning of the 15th century, the known world consisted of Europe, Asia, India and the explored shores of Africa. For Europeans, who never left their own soil and obtained everything they needed from China, there was nothing across the Atlantic. Anyone who sailed in that direction was believed to meet boiling waters or be swallowed by the ocean. The best the cartographers of the era could do was draw the Earth from imagination, label the far side of the ocean “unreachable” and fill that part of the map with imaginary islands.

Christians, meanwhile, were successful traders and farmers obsessed with wars and feasts. They enjoyed life as it was and never went looking for adventure. As long as they had enough wars and food, exploring the rest of the world to find out what it looked like and what it hid seemed pointless. Over some 1,500 years dominated by ignorance and the harsh authority of the church, religious zealotry stood as a huge obstacle to scientific progress in Europe. In the year 391, Christian zealots in Alexandria, the city founded by Alexander the Great, attacked its famous library, which held thousands of papyrus scrolls gathered from the four corners of the world. They destroyed it, wiping out the knowledge of thousands of years. Among those thousands of works was a masterpiece that could have helped humanity open the doors of a golden age: Ptolemy’s world map from the 2nd century AD.

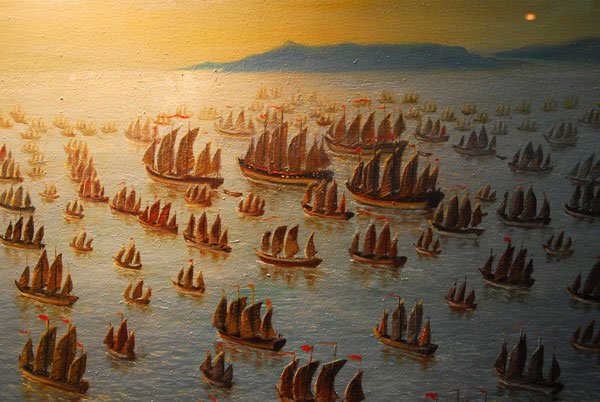

Meanwhile, in the 15th century, China succeeded in bringing down the Turkish states through internal intrigue and was at last rid of them. With a population of more than 100 million and an enormous navy, China quickly became the strongest trading power in the known world. On the emperor’s orders, an admiral named Cheng Ho (Zheng He) assembled a fleet of 317 ships manned by some 28,000 men and set out to explore the world. Cheng Ho sailed far beyond the coasts of China, reaching the Persian Gulf and the Red Sea and expanding China’s trade routes even further. He could even have gone further still and reached Europe by sailing around Africa.

The flagship of Cheng Ho’s fleet was 135 metres long. The Santa Maria, which carried Christopher Columbus to the shores of San Salvador in 1492, was only 27 metres long. By 1405, China controlled almost all of the known routes and, believing it already had everything it wanted, felt no desire to explore the rest of the world. So the Chinese, too, stayed in their own lands. In Europe, meanwhile, by a stroke of luck, a copy of Ptolemy’s book “Geography” was found and immediately translated into Latin.

The Christians who had burned the world’s knowledge in 391 learned in 1406 that the world is round…

The Smell of Spice Disrupted the Sleep of Europeans

The deliberately corrupted holy book of the Crusaders placed Jerusalem at the centre of the world. That is why, between 1095 and 1271, a total of 12 Crusades took place. During the Crusades, Christians finally managed to break free of the church’s authority and realized the riches the world had to offer. Trade, however, was still a huge challenge.

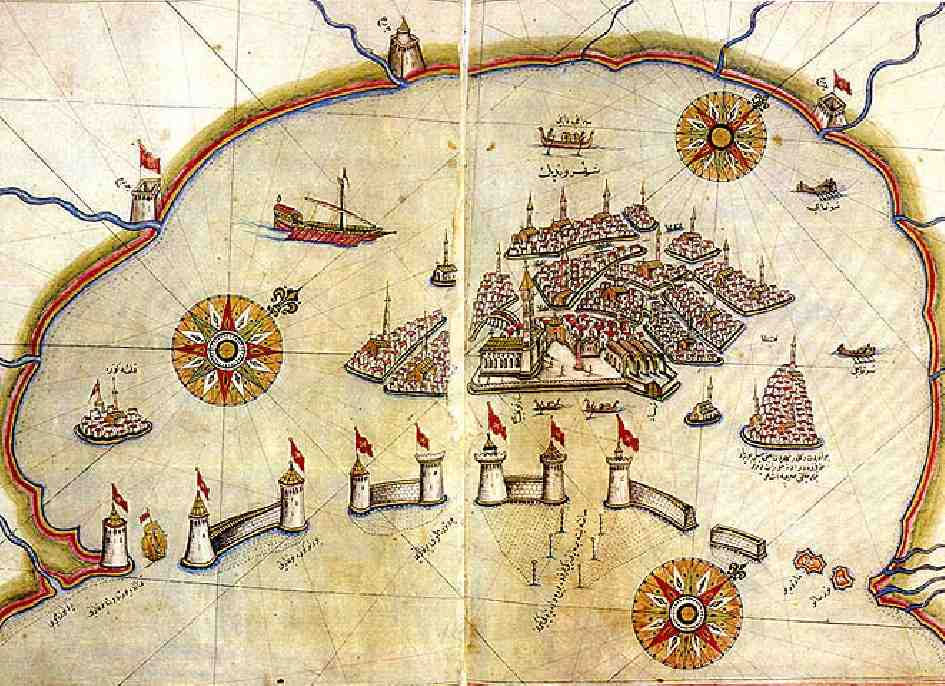

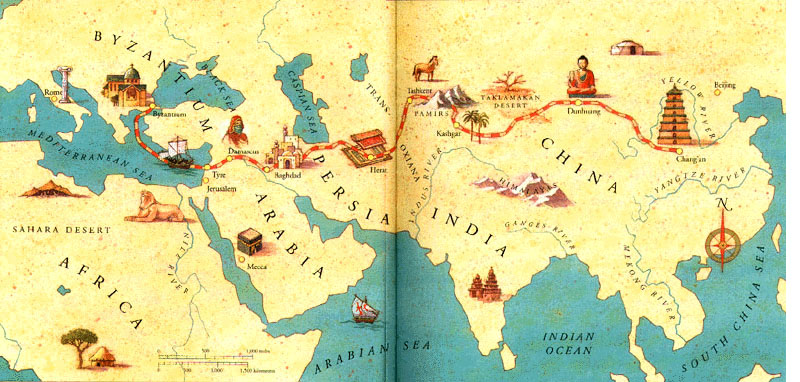

Europeans loved meat, and they enjoyed it most with wine and spices. In the Middle Ages, Europe’s only source of spices was Egypt and other Muslim countries, while the spice trade itself was in the hands of two Italian cities, Venice and Genoa. The prices they charged were so high that in some parts of Europe spices were even used as money. Those who could not afford these prices had to wait for the caravans coming along the Silk and Spice Roads through İstanbul (Constantinople).

There had to be an easier way to obtain spices and other goods! The answer lay in a sea route that ran past the known shores of Africa, around the continent and on to Europe. But how was it to be explored?

Pessimism was widespread, but to change the course of history, a single person with a different perspective was enough. That person was the Portuguese Prince Henry, known to history as Henry the Navigator. Interested in mathematics and astronomy, Henry did not fill the gaps in his knowledge with answers from the Bible; he probed them with curiosity. He believed a solution had to be reached step by step, with the utmost care. Henry would use this approach to go beyond the known shores of Africa and explore the mysteries waiting there.

Europe Wakes Up

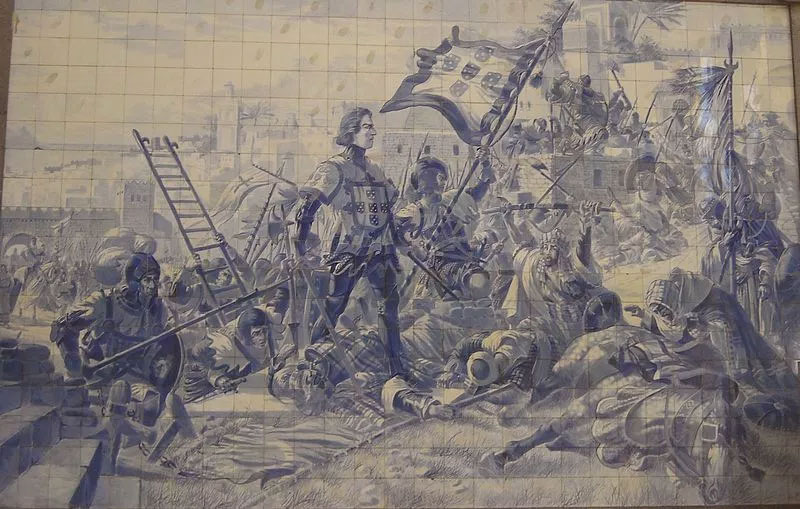

In the Middle Ages, Christianity aimed to make the whole known world Christian. Kings leading Crusader armies therefore had to dress elegantly and look charismatic. Henry, who began the invasion of Africa at the age of 21 by capturing Ceuta, on the North African coast across the Strait of Gibraltar, looked like a true Christian prince in his colourful clothes made of the finest silk.

The last Byzantine emperor, Constantine XI, was also wearing elegant clothes when he fell to the swords of the Janissaries at the walls of Constantinople. Only the next day was he recognized among the piles of the dead, by his royal purple shoes embroidered with eagles…

When Ceuta fell, Henry was dazzled by the riches of the East. The city was full of the world’s marvels. Among the spoils were gold, silver, Indian silk and sacks full of cinnamon, pepper, cloves and ginger. After the city fell, Muslims immediately stopped trading with Ceuta. For Henry, who wanted to find the route to the source of all these riches, reaching the Red Sea was a must. The route ran south along the unexplored coast of Africa, and no one knew where it led. Muslims called these waters “The Green Sea of Darkness”, and Christians believed they boiled.

The Key of Breakthrough: The Caravel

While Henry was lost in thought, two young sailors named John Gonçalves and Tristan Vaz asked him for work. Henry sent the two adventurers to explore the shores of Africa. Gonçalves and Vaz, together with their friend Bartholomew Perestrelo, sailed past the Canary Islands and discovered the island of Porto Santo. But thanks to a pregnant rabbit Perestrelo had brought along, the island soon came under the control not of humans but of rabbits.

Leaving the island to the rabbits for good, Gonçalves and Vaz set out on a new voyage on Henry’s orders. This time, they explored the Madeira Islands. After these successes, Henry summoned the most famous geographer of the time, Master Jaime, to his court. The prince wanted a ship that could explore the unknown coasts in just a few voyages. Drawing on Jaime’s work, Henry designed the ship that would become a breakthrough in the history of exploration: the caravel. Only 21 metres long, the caravel carried a crew of just 21. Its three triangular sails gave it good manoeuvrability and allowed it to travel long distances.

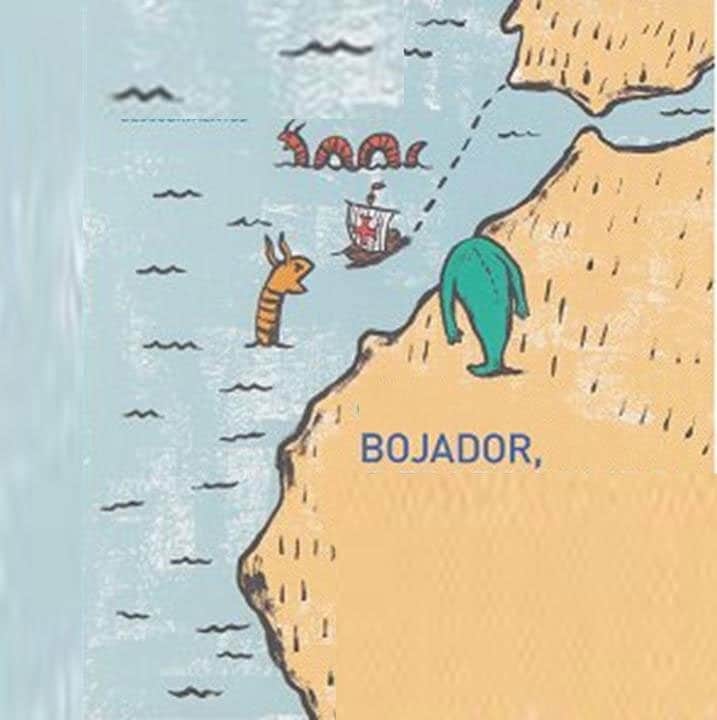

Between 1421 and 1433, caravels carried Portuguese sailors as far as Cape Bojador, west of the Sahara Desert, but they could go no further. Whenever storms struck the sailors there, fears of demons, yellow hurricanes and boiling waters drove the caravels back. But Henry had another virtue: patience. He encouraged his sailors to push on. In 1434, the long-awaited moment came, and a young sailor named Gil Eanes managed to round Cape Bojador. Contrary to expectations, none of the ships caught fire or sank into boiling waters. In the following years, Eanes managed to go 240 kilometres further. The Portuguese public, however, regarded these attempts to explore Africa as futile, since Henry was making no effort to bring spices and silk to the country.

A New Invention: Slave Trade

In 1441, Henry ordered John Gonçalves’s brother, Antam, to bring back “whatever he finds” from Africa. He returned with gold dust, ox hides, ostrich eggs and 10 Africans. Henry, the first known European to taste ostrich eggs, declared them delicious. The public, however, was interested not in the ostrich eggs but in the African slaves. To fill the gap in the Portuguese workforce, they began forcing these half-naked “inferior” people, whose language no one understood, to work.

The next voyage brought back 165 slaves, and the one after that 235. After years of struggling exploration, the Portuguese sailors had not even reached the tip of the continent, but they had found what interested them most: slaves. Slaves were soon in such high demand that a new form of trade emerged. Ships bound for Africa began returning filled not with goods but with African men, women and children. An obsession with comfort, along with greed, would soon make slavery a routine part of life in Europe.

In 1453, the course of history changed dramatically. The 21-year-old Sultan Mehmet captured Constantinople, changing not only the trade routes leading to Europe but the future of the world. It took the young Sultan only 54 days to breach the strongest walls of the known world and take the city. Europe was in total shock. Fatih (“the Conqueror”), as Mehmet came to be known, closed the gates of the Silk and Spice Roads and ended routes that had operated for centuries. Europeans now had no choice but to find new routes to India.

The Beginning of the Sad Demise of the Native Americans

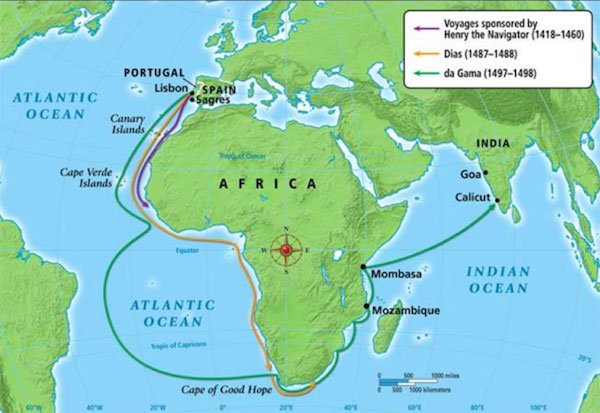

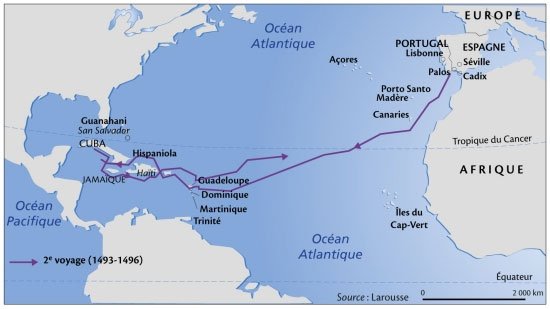

In the seven years following the fall of Constantinople, Henry added 3,218 kilometres to the world map through his unfailing intelligence, courage and patience. The drive for exploration he had fuelled revived once it became unavoidable to find a way around the bottleneck in the trade routes. Bartholomew Diaz, sent out by King John II, would reach the southern tip of Africa. But the man who came after him, Christopher Columbus, far from reaching India, would make the most feared voyage of all: he crossed the Atlantic and reached Cuba.

Believing he was in India, Columbus called the native people “Indians”. From that first moment, these people’s fortunes took a wrong turn. In the years to come, the Native Americans would be butchered by European greed and lose all of their treasures and lands.

Hail Greed!

With the start of the 16th century, the Silk and Spice Roads lost even more of their importance, while unlimited riches poured into Europe from the Americas. It was at this moment that Europeans finally began to leave their continent and migrate to the new colonies established in the New World. They did not forget to take African slaves with them. After Fatih sealed the doors of the old world, the greed of the Old Continent unleashed itself in the New World as invasions, plunder and an endless lust for treasure.

After the American War of Independence, the United States split into two cultures: an industrial North and an agricultural South. In the South, 4 million of the 9 million inhabitants were black slaves. Black people finally gained their freedom after the devastating Civil War between the Rebels and the Yankees. Or did they? On the contrary, their suffering continued for decades, as they were constantly humiliated and forced to work during the years of capitalism’s awakening.

Today, in many African countries, children start working at the age of 4. Some of the world’s leading companies employ thousands of child workers. Foxconn, which serves as the assembly line of Apple, the world’s most valuable company, is a good example. Today we live only to consume, and humanity has almost lost its ambition to explore the unknown as Henry did centuries ago.

This article was originally published on DijitalX TR by Müfit Yılmaz Gökmen.